Edge Computing vs Cloud Technology is a strategic decision about where to process data so that latency, security, and cost stay in balance with scalability, resilience, governance, and predictable performance. The question grows more pressing as devices and applications generate more data at the edge, and as organizations need observable outcomes and auditable traces across industries and geographies.

Organizations weighing this choice typically ask three things: where data should be processed, how quickly insights must be produced, and how regulatory requirements shape data residency. Edge computing benefits come into sharper focus when low latency, local decision-making, and reduced bandwidth translate into tangible competitive advantages, especially for organizations operating across borders and regulatory regimes.

Latency is a core differentiator. Processing at the edge minimizes network round trips and enables near-real-time actions in industrial automation, healthcare monitoring, and autonomous systems, whereas cloud-based processing adds network hops that can delay critical decisions in time-sensitive scenarios. Local processing also keeps essential functions running when networks falter, which makes it central to resilience.

Hybrid cloud approaches blend on-site or edge resources with a centralized cloud architecture: latency-sensitive workloads run close to the source, while scalable analytics, archiving, and policy-driven governance remain in the cloud for long-term optimization, compliance, cross-site collaboration, and enterprise-wide planning. Ultimately, a deliberate mix of edge and cloud capabilities, guided by data gravity and business requirements, lets organizations build resilient, cost-efficient systems that meet performance targets without sacrificing governance or security, and offers a practical path toward incremental modernization and continuous ROI.

Another way to frame the discussion is as a spectrum running from on-device processing to centralized cloud services, a spectrum widely explored in fog computing and distributed analytics. In this view, latency-sensitive tasks stay at the network edge while deeper insights are derived in the cloud, and a hybrid cloud strategy preserves performance without sacrificing governance. Organizations map workloads to micro data centers or edge nodes for local data processing, and aggregate data securely and at scale in the cloud for long-term analysis. Agreeing on shared terms such as local processing, edge nodes, central analytics, and on-demand resources helps teams communicate clearly about architecture while they pursue a practical migration path across edge, cloud, and hybrid configurations.

Edge Computing vs Cloud Technology: Optimizing Latency and Data Processing at the Edge

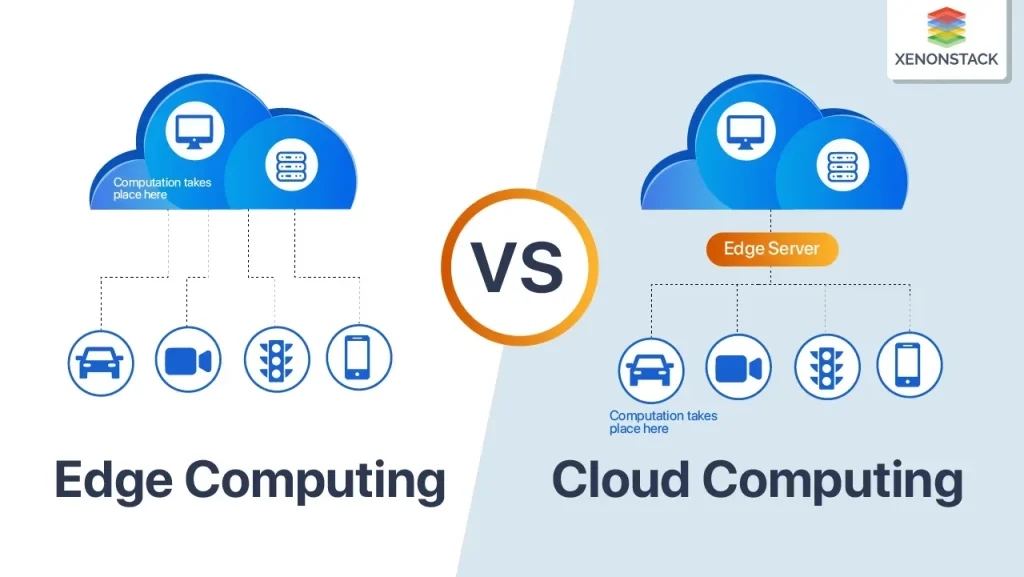

Edge computing decouples data processing from centralized data centers by moving compute closer to sensors, devices, and endpoints. This proximity cuts latency, enabling real-time or near-real-time decisions in use cases such as autonomous vehicles, industrial automation, and smart healthcare devices. The benefits of edge computing include faster responses, lower bandwidth consumption, and stronger data privacy, since sensitive information can be analyzed locally and only the necessary results transmitted.
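To make the bandwidth and privacy points concrete, here is a minimal Python sketch, assuming a device that collects a window of numeric sensor readings and uploads only a summary plus any readings above a threshold. The names `summarize_window` and `Summary`, the 80.0 limit, and the synthetic readings are hypothetical illustrations, not part of any specific edge platform.

```python
import json
import statistics
from dataclasses import asdict, dataclass


@dataclass
class Summary:
    """Compact record sent upstream instead of every raw reading (hypothetical schema)."""
    sensor_id: str
    count: int
    mean: float
    minimum: float
    maximum: float
    anomalies: list[float]


def summarize_window(sensor_id: str, readings: list[float], limit: float) -> Summary:
    """Reduce a window of raw readings to summary statistics plus readings above `limit`."""
    return Summary(
        sensor_id=sensor_id,
        count=len(readings),
        mean=statistics.fmean(readings),
        minimum=min(readings),
        maximum=max(readings),
        anomalies=[r for r in readings if r > limit],
    )


# Synthetic window: 600 readings (e.g. 10 minutes at 1 Hz) with one spike.
raw = [20.0 + (i % 10) * 0.1 for i in range(600)]
raw[300] = 95.0

summary = summarize_window("sensor-42", raw, limit=80.0)

# Compare what would cross the network in each case.
raw_bytes = len(json.dumps(raw).encode())
summary_bytes = len(json.dumps(asdict(summary)).encode())
print(f"raw payload: {raw_bytes} bytes, summary payload: {summary_bytes} bytes")
```

The raw window never leaves the device; the cloud still learns the range, the average, and the single anomalous value. Which statistics to keep is a per-workload decision.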

That said, edge computing adds management complexity and requires specialized hardware at the edge. Many organizations therefore pursue a hybrid approach: keep latency-sensitive workloads at the edge and push heavy analytics and archival storage to the cloud. In this setup, the cloud architecture provides elastic scalability, global visibility, and a rich service ecosystem, while processing data at the edge, where it is generated and where data gravity holds it, reduces the volume that must be moved and the bandwidth costs that come with it.
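The split described above can be sketched as a small edge controller, assuming a pressure sensor and a relief valve: the safety decision is made on the device with no network round trip, while every reading is also queued for later upload to the cloud. `EdgeController`, the 8.0 limit, and the valve action are hypothetical; a real controller would drive an actuator rather than append a string.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeController:
    """Hypothetical edge controller: act locally now, defer analytics to the cloud."""
    pressure_limit: float
    cloud_batch: list[dict] = field(default_factory=list)
    actions: list[str] = field(default_factory=list)

    def on_reading(self, timestamp: float, pressure: float) -> None:
        # Latency-sensitive path: the decision is made on the device itself.
        if pressure > self.pressure_limit:
            self.actions.append(f"{timestamp}: open relief valve")
        # Non-urgent path: keep the reading for cloud-side analytics and archiving.
        self.cloud_batch.append({"t": timestamp, "pressure": pressure})

    def drain_batch(self) -> list[dict]:
        """Return the accumulated batch for upload and start a new one."""
        batch, self.cloud_batch = self.cloud_batch, []
        return batch


controller = EdgeController(pressure_limit=8.0)
for t, p in [(0.0, 6.5), (1.0, 8.4), (2.0, 7.1)]:
    controller.on_reading(t, p)

print(controller.actions)             # ['1.0: open relief valve']
print(len(controller.drain_batch()))  # 3
```

Because the valve decision does not wait on the network, it still happens if the uplink is slow or down; only the analytics are delayed.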

Hybrid Cloud and Cloud Architecture: Designing a Scalable, Low-Latency Hybrid Strategy

A well-designed hybrid cloud strategy blends on-premises or edge processing with cloud services, letting you run time-critical tasks close to the data source while leveraging cloud-scale analytics and governance for broader insights. From a cloud architecture perspective, this approach supports consistent security controls, unified identity management, and simplified interoperability across environments, delivering resilience and scalability with controlled latency.
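One way to picture a consistent security control tied to a unified identity is message signing: each edge device signs its payloads with its own key, and the cloud verifies every message with the same check regardless of which site sent it. The sketch below uses Python's standard `hmac` module with HMAC-SHA256; the in-memory `DEVICE_KEYS` registry and the device name are hypothetical stand-ins for a real identity or secrets service. HMAC provides integrity and authenticity, not confidentiality, so payloads would still need encryption in transit (for example TLS).

```python
import hashlib
import hmac
import json

# Hypothetical identity registry: device ID -> secret key.
# In practice keys would come from a secrets manager or hardware security module.
DEVICE_KEYS = {"gateway-eu-01": b"example-key-do-not-use"}


def sign(device_id: str, payload: dict) -> dict:
    """Edge side: attach the device identity and an HMAC-SHA256 tag to a payload."""
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(DEVICE_KEYS[device_id], body, hashlib.sha256).hexdigest()
    return {"device_id": device_id, "payload": payload, "tag": tag}


def verify(message: dict) -> bool:
    """Cloud side: the same check for every message, whatever site it came from."""
    key = DEVICE_KEYS.get(message["device_id"])
    if key is None:
        return False  # unknown identity is rejected outright
    body = json.dumps(message["payload"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])  # constant-time comparison


msg = sign("gateway-eu-01", {"temp_c": 21.5})
print(verify(msg))  # True
msg["payload"]["temp_c"] = 99.0  # tampering in transit
print(verify(msg))  # False
```

Serializing with `sort_keys=True` keeps the signed bytes identical on both sides even if dictionary ordering differs.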

To implement it effectively, start by defining workload profiles, data gravity, and regulatory constraints. Use edge gateways or micro data centers to preprocess data and decide what stays on-site and what is sent to the cloud. Then establish a unified orchestration layer that automates deployment, updates, and monitoring across edge and cloud, providing observability and keeping costs in check through continuous optimization of latency, bandwidth, and total cost of ownership.
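The first step, defining workload profiles, can be captured as data plus a few explicit placement rules. The following is a minimal sketch under stated assumptions: the profile fields, the 50 ms cloud round trip, and the 100 GB/day threshold are hypothetical values chosen for illustration, and real rules would be tuned to measured latency, actual transfer prices, and the applicable regulations.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    EDGE = "edge"
    CLOUD = "cloud"
    HYBRID = "hybrid"  # preprocess at the edge, analyze in the cloud


@dataclass
class WorkloadProfile:
    name: str
    max_latency_ms: float        # response deadline
    daily_data_gb: float         # volume generated at the source per day
    must_stay_onsite: bool       # regulatory or data-residency constraint
    needs_elastic_compute: bool  # e.g. model training or fleet-wide analytics


def place(w: WorkloadProfile, cloud_rtt_ms: float = 50.0,
          heavy_gb_per_day: float = 100.0) -> Placement:
    """Illustrative placement rules; the default thresholds are assumptions."""
    if w.must_stay_onsite:
        return Placement.EDGE  # residency rules override everything else here
    deadline_below_rtt = w.max_latency_ms < cloud_rtt_ms
    heavy_data = w.daily_data_gb > heavy_gb_per_day  # data gravity: costly to move
    if deadline_below_rtt or heavy_data:
        # Keep the hot path local; still use the cloud if elastic compute is needed.
        return Placement.HYBRID if w.needs_elastic_compute else Placement.EDGE
    return Placement.CLOUD


# Hypothetical workloads: (name, deadline ms, GB/day, residency-bound, elastic)
workloads = [
    WorkloadProfile("line-stop safety check", 10, 5, False, False),
    WorkloadProfile("video quality inspection", 30, 800, False, True),
    WorkloadProfile("monthly sales forecast", 60_000, 1, False, True),
    WorkloadProfile("patient vitals (residency-bound)", 500, 2, True, False),
]
for w in workloads:
    print(f"{w.name}: {place(w).value}")
```

Writing the rules down this way makes placement decisions reviewable, and re-running them when an input changes (a new regulation, a faster link) shows which workloads should move.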

Frequently Asked Questions

Edge Computing vs Cloud Technology: How does latency influence the choice between edge processing and cloud architecture?

Latency is a fundamental factor in Edge Computing vs Cloud Technology. Edge processing reduces latency by handling data where it is produced, enabling near-instant decisions, a core benefit for time-sensitive workloads. Cloud architecture centralizes heavy analytics and orchestration but can add latency because data must travel over the network. In practice, favor edge processing for real-time workloads and for sites with intermittent connectivity, and reserve the cloud for large-scale analytics and long-term storage; many organizations adopt a hybrid approach to balance both.
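A simple latency budget makes this trade-off concrete. The sketch below assumes end-to-end response time is roughly network round trip plus queueing plus compute time; the millisecond figures are hypothetical, not benchmarks. It shows how a faster cloud server can still miss a tight deadline once the network hop is included.

```python
def end_to_end_ms(network_rtt_ms: float, queueing_ms: float, compute_ms: float) -> float:
    """Response time = network round trip + wait for a worker + processing time."""
    return network_rtt_ms + queueing_ms + compute_ms


def meets_deadline(deadline_ms: float, **path: float) -> bool:
    """True if the given path's end-to-end time fits within the deadline."""
    return end_to_end_ms(**path) <= deadline_ms


# Hypothetical figures for one control decision with a 20 ms deadline.
edge = {"network_rtt_ms": 1.0, "queueing_ms": 2.0, "compute_ms": 8.0}   # modest on-site CPU
cloud = {"network_rtt_ms": 45.0, "queueing_ms": 1.0, "compute_ms": 2.0}  # faster, but farther away

for label, path in [("edge", edge), ("cloud", cloud)]:
    print(label, end_to_end_ms(**path), meets_deadline(20.0, **path))
# edge 11.0 True
# cloud 48.0 False
```

Even though the cloud path spends a quarter of the compute time, the round trip alone exceeds the deadline.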

Edge Computing vs Cloud Technology: When is a hybrid cloud strategy advantageous for edge workloads?

A hybrid cloud strategy often provides the best balance in the Edge Computing vs Cloud Technology decision. It lets you process data at the edge to meet latency requirements while using the cloud for scalable analytics, model training, and governance. Key steps include mapping data flows, orchestrating data movement between edge and cloud, and enforcing consistent security controls across environments. By aligning workload characteristics, data gravity, and regulatory needs, you can capture the benefits of edge computing and use cloud architecture wherever it adds value.
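Orchestrating data between edge and cloud has to cope with links that come and go. A common pattern is store-and-forward: records are buffered locally and flushed in order when the uplink is available. This is a minimal sketch, assuming a caller-supplied `send` function that returns True on success; `StoreAndForward` and `fake_send` are hypothetical names, and a production version would typically persist the buffer to disk rather than hold it in memory.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer edge records locally and flush them to the cloud when the link is up."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 10_000) -> None:
        self._send = send                          # hypothetical upload hook
        self._buffer: deque = deque(maxlen=capacity)  # oldest records drop first when full

    def record(self, item: dict) -> None:
        self._buffer.append(item)

    def flush(self) -> int:
        """Send buffered records in order; stop at the first failure and keep the rest."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # link is down: retry on the next flush
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)


# Simulated uplink that only succeeds while link_up is True.
link_up = False
uploaded: list = []


def fake_send(item: dict) -> bool:
    if link_up:
        uploaded.append(item)
    return link_up


queue = StoreAndForward(fake_send, capacity=3)
for i in range(5):
    queue.record({"seq": i})
print(queue.flush(), queue.pending())               # 0 3  (offline; newest 3 kept)
link_up = True
print(queue.flush(), [r["seq"] for r in uploaded])  # 3 [2, 3, 4]
```

Bounding the buffer trades completeness for predictable memory use on small devices: with `maxlen` set, `deque` discards the oldest records during a long outage. Whether dropping, sampling, or spilling old data to local storage is acceptable depends on the workload.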

| Aspect | Edge Computing | Cloud Technology |
|---|---|---|
| Definition | Processing data near the source to reduce latency; enables local decision‑making and resilience. | Centralized, scalable resources delivered over the internet; on‑demand provisioning and global analytics. |
| Latency | Low latency for real‑time or near real‑time decisions. | Higher latency due to network hops; not ideal for time‑sensitive decisions at the edge. |
| Bandwidth/Data Transfer | Minimizes data transmitted; send only relevant data or summaries. | Cloud handles larger data volumes; higher bandwidth usage for raw data. |
| Reliability/Connectivity | Operates with intermittent connectivity; local processing persists. | Depends on network availability; centralized services rely on connectivity. |
| Security & Governance | Data sovereignty possible; expanded attack surface across many edge devices; governance distributed. | Centralized security controls; easier governance and compliance management when data is aggregated in one place. |
| Cost Dynamics | Capex on edge devices; potential savings on data transfer and ongoing cloud costs. | Pay‑as‑you‑go model; scalable resources; data transfer costs to consider. |
| Suitability/Use Cases | Industrial IoT, real‑time monitoring, autonomous systems; local decisioning. | Big data analytics, AI training, global collaboration, centralized dashboards. |
| Hybrid Approach | Often best with edge for latency‑sensitive tasks; cloud for analytics. | Hybrid or cloud‑first strategies with edge gateways for latency‑critical workloads. |
| Security & Compliance (Overview) | Edge security requires device hardening, encryption, and governance across distributed devices. | Cloud security and governance with data localization considerations. |
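The cost-dynamics row can be turned into a rough break-even estimate: edge hardware (capex) pays for itself when the data transfer it avoids outweighs its running costs. The function below is a back-of-the-envelope sketch that ignores compute, storage, and staffing costs on both sides, and every figure in the example is hypothetical; substitute your own hardware quotes and your provider's actual transfer pricing.

```python
from typing import Optional


def breakeven_months(
    edge_capex: float,
    edge_opex_per_month: float,
    raw_gb_per_month: float,
    reduction_ratio: float,
    transfer_cost_per_gb: float,
) -> Optional[float]:
    """Months until edge hardware is paid back by avoided data transfer.

    reduction_ratio is the fraction of raw data no longer sent upstream (0.9 = 90% less).
    Returns None when the avoided transfer never covers the edge running costs.
    """
    avoided_gb = raw_gb_per_month * reduction_ratio
    net_monthly_saving = avoided_gb * transfer_cost_per_gb - edge_opex_per_month
    if net_monthly_saving <= 0:
        return None
    return edge_capex / net_monthly_saving


# Hypothetical site: 12,000 in hardware, 200/month to run it, 20,000 GB/month of raw data.
months = breakeven_months(12_000, 200, 20_000, 0.9, 0.05)
print(round(months, 1))  # 17.1

# Same site at a lower (hypothetical) transfer price: savings never cover running costs.
print(breakeven_months(12_000, 200, 20_000, 0.9, 0.01))  # None
```

Worked by hand with these numbers: 18,000 GB avoided at 0.05 per GB saves 900 per month; subtracting 200 in running costs leaves 700 per month, so 12,000 / 700 is about 17.1 months.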

Summary

Edge Computing vs Cloud Technology presents a spectrum rather than a binary choice. By understanding the strengths and limitations of each approach, organizations can craft architectures that optimize latency, data governance, scalability, and total cost of ownership. Begin with a clear assessment of workload types, data gravity, and regulatory requirements, then design a hybrid strategy that uses the edge for time‑sensitive processing and cloud resources for heavy analytics and centralized governance. The aim is not to choose one over the other but to build a balanced, adaptable architecture that meets business goals today and remains flexible for tomorrow. With a cohesive governance model and a well‑defined data flow, you will be well positioned to harness the best of both edge and cloud and drive meaningful outcomes across your organization.